If you sit down with a group of software developers and have a couple of beers, eventually people start telling war stories, and one of them will be about DateTime values. The best cure for those problems is to include a time zone when you record a datetime value, and the easiest way to do that is to use a DateTimeOffset. I’m going to show you how to add time zones to a React application and put DateTimeOffsets to work.

Author: jzuerlein

Deadlocks…Avoid them by only having one save method in your repository.

I’ve been trying to figure out the cause of a SQL deadlock problem, and it dawned on me that preventing deadlocks shouldn’t be that hard. You just have to save data in the same order every time, and the easiest way to do that is to have a repository with one save method. I’ll show you what a deadlock is and how to prevent one.

What’s the difference between Span of T and Memory of T?

My goal is not to tell you how awesome Span of T and Memory of T are, but to get you over the hump of understanding how they work. I cover what they do and why, along with some description of their internals. Finally, I discuss what the owner/consumer model is, and why it’s relevant to Memory of T.
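As a rough illustration (a minimal sketch, not taken from the talk), the C# below shows the most visible difference: a Span of T is a view that only lives on the stack, while a Memory of T is an ordinary struct that can be stored and turned into a Span only when it is needed. The `Sum` helper and the variable names are made up for this example.

```csharp
using System;

int[] data = { 1, 2, 3, 4, 5, 6 };

// Span<T> is a view over existing memory; slicing does not copy.
Span<int> window = data.AsSpan(1, 3);   // views data[1], data[2], data[3]
window[0] = 20;                         // writes through to data[1]

// Memory<T> can live on the heap (in a field, a collection, or across an await)
// until a Span is actually needed.
Memory<int> stored = data.AsMemory(1, 3);

// Hypothetical helper: obtain a Span from the Memory only at the point of use.
int Sum(Memory<int> memory)
{
    var total = 0;
    foreach (var value in memory.Span)
    {
        total += value;
    }
    return total;
}

Console.WriteLine(data[1]);      // the write made through the span is visible in the array
Console.WriteLine(Sum(stored));  // sums data[1], data[2], and data[3]
```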

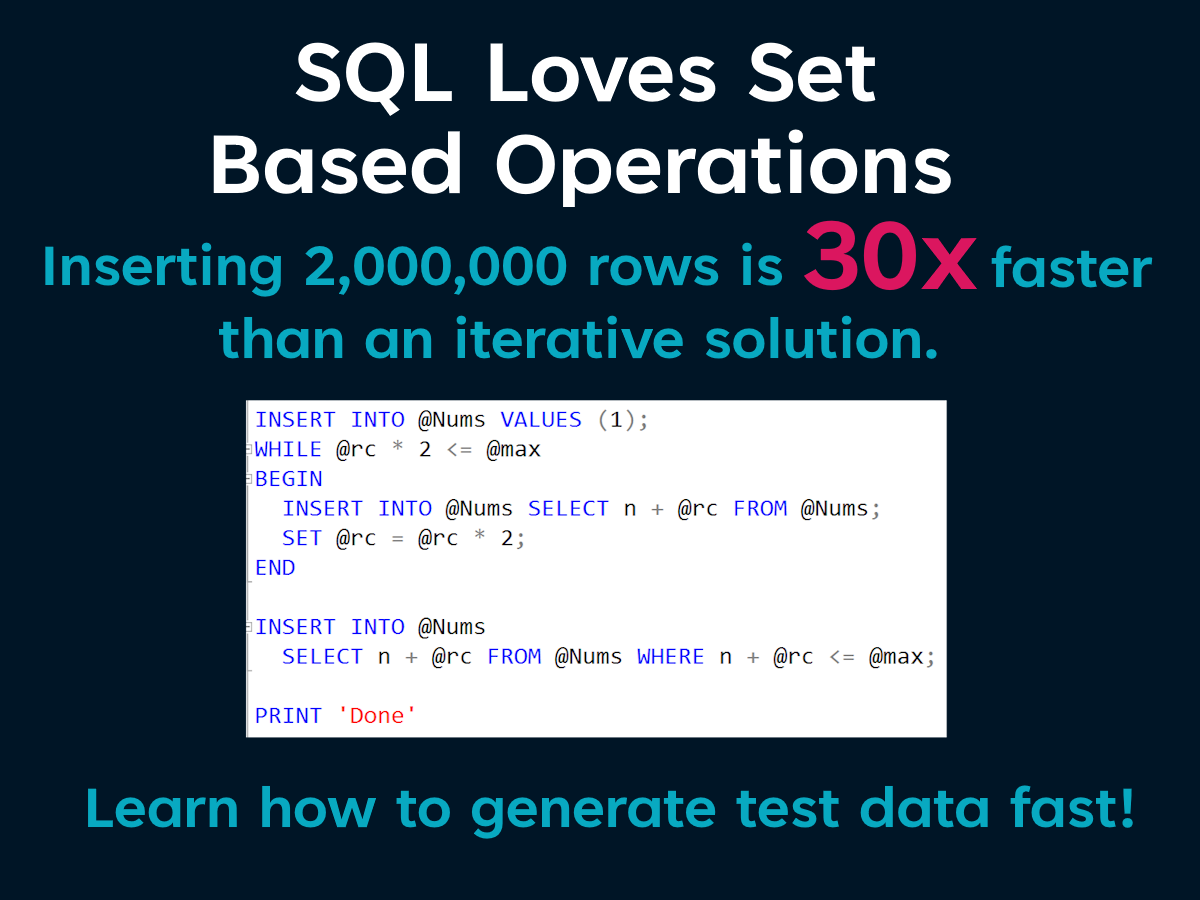

Loading Millions Of Rows Of Test Data In Seconds

Most tools that generate test data do so iteratively. But SQL loves set-based operations. With a little T-SQL know-how, you can create millions of rows of test data in seconds. You can even leverage public data sources to create more realistic data that conforms to your application’s business rules. No third-party tools, just a handful of queries. I’m keeping it simple.

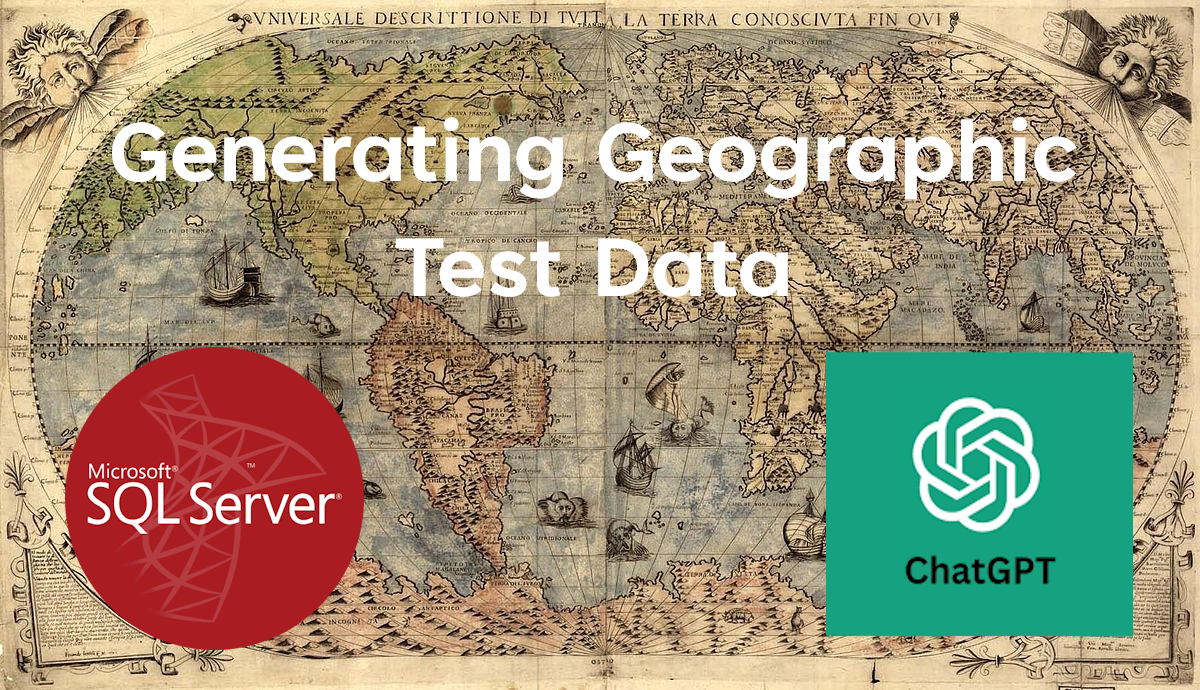

Generating Geographic Test Data With T-SQL and ChatGPT

When you need lots of test data (100K+) for addresses that represent real places, you could leverage ChatGPT and some T-SQL wizardry.

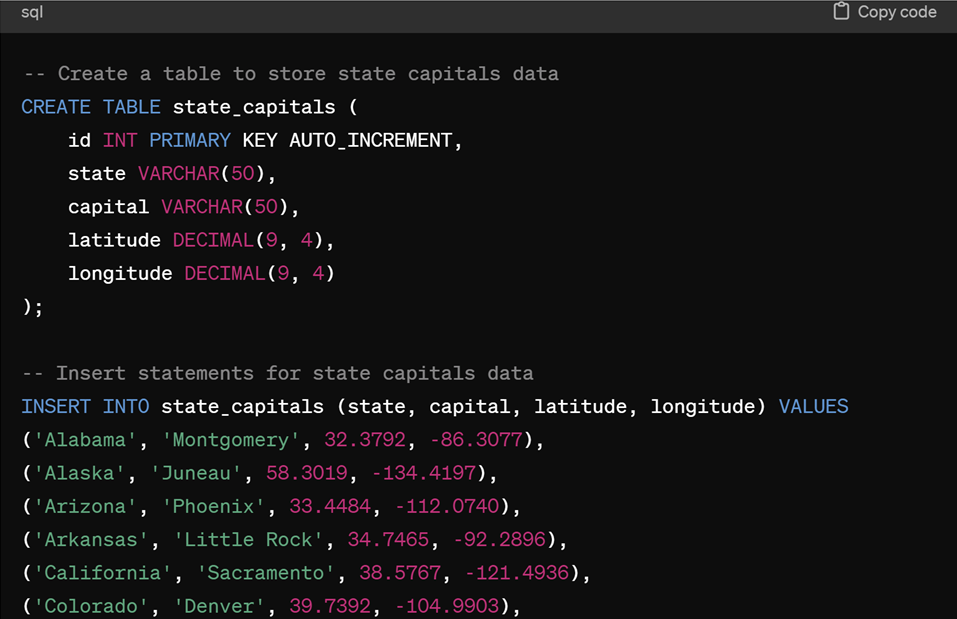

To do this, I asked ChatGPT to give me a list of all the US state capitals, including their longitude and latitude, and then turn the data into a T-SQL insert statement.

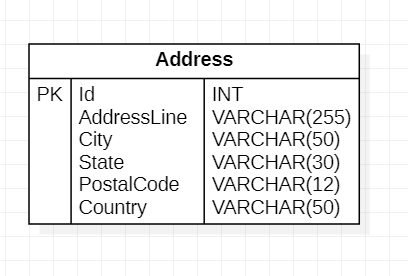

That gives me the longitude and latitude of a point in each state. I created an Address table that will store a list of addresses.

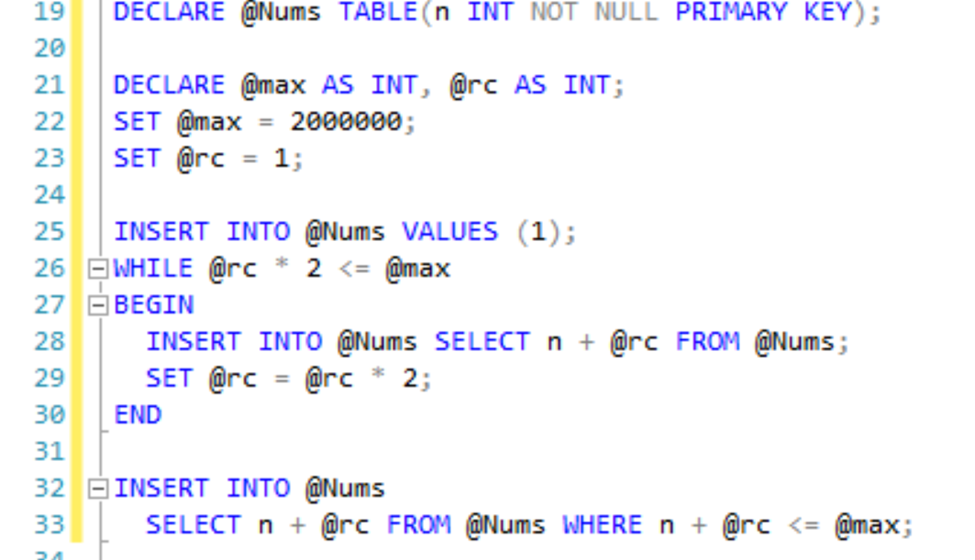

I want to create 100,000 rows in the Address table. SQL is really good at manipulating sets of data, so I want to insert rows into the table with a single insert statement, to make the process faster. To do that I’m going to create a table variable and populate it with a list of integers. Lots of integers.

Once the table is created, I can insert a single row to seed the table, with the number 1. A while loop can be used to double the number of inserts over each iteration by selecting all the rows in the table and multiplying the integer by 2.

Now that we have a table of integers from 1 to 2,000,000, I can use the table to create new data.
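The doubling idea can be sketched in C# to show why so few passes are needed. This is only an illustration of the arithmetic, not the T-SQL script itself. In this sketch each pass adds the current row count to every existing value so the integers stay consecutive; that detail is an assumption, since the original script appears only as an image. `BuildNumbers` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: doubles a list of consecutive integers until it holds
// at least minimumCount values, mirroring a repeated set-based INSERT ... SELECT.
List<int> BuildNumbers(int minimumCount)
{
    var numbers = new List<int> { 1 };           // seed row
    while (numbers.Count < minimumCount)
    {
        var offset = numbers.Count;              // current row count
        // One "set-based" pass: copy every existing row, shifted by the offset.
        // ToList() materializes the copy before the list is modified.
        numbers.AddRange(numbers.Select(n => n + offset).ToList());
    }
    return numbers;
}

var nums = BuildNumbers(2_000_000);
Console.WriteLine($"{nums.Count} rows, from {nums[0]} to {nums[^1]}");
```

Because the row count doubles on every pass, roughly two million rows take only 21 passes.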

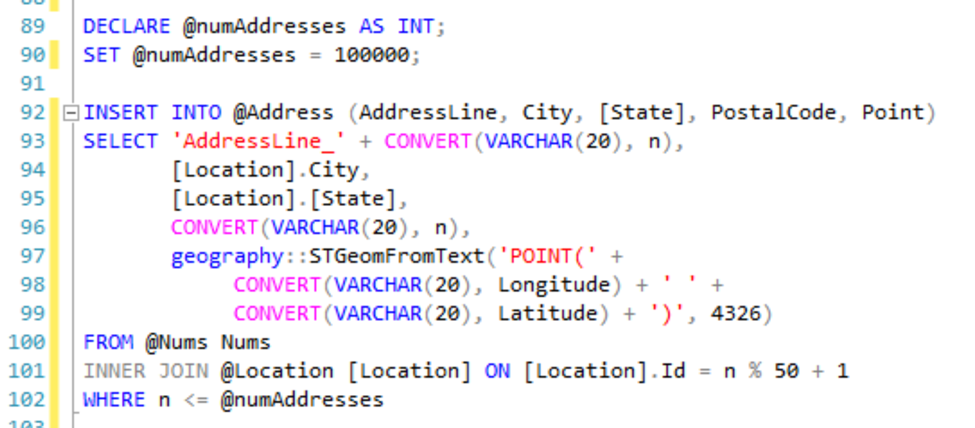

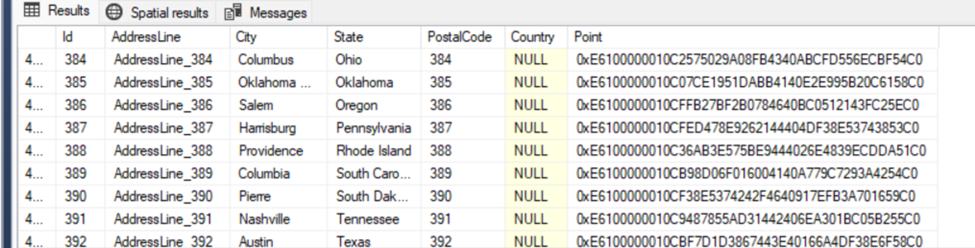

I’ll select all the rows from the @Nums table where the number is less than or equal to the number of addresses I want to create, in this case 100,000. I also want to join with the table of state capitals, so I can use the city, state, longitude, and latitude fields to populate the data. I can use the modulus operator to match each number with one of the 50 rows in the state capitals table.
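To make the modulus matching concrete, here is a small C# sketch of the same pairing. The three-row `capitals` array is a hypothetical stand-in for the 50-row table, and it assumes the rows are numbered from 0; with 1-based identifiers the match would be on `n % count + 1` instead.

```csharp
using System;
using System.Linq;

// Hypothetical stand-in for the state capitals table (only three rows here).
var capitals = new[]
{
    (Id: 0, City: "Springfield", State: "IL"),
    (Id: 1, City: "Madison", State: "WI"),
    (Id: 2, City: "Lansing", State: "MI"),
};

// Each generated number n is paired with the capital at index n % capitals.Length,
// so the capitals repeat in a cycle across the generated rows.
var addresses = Enumerable.Range(1, 7)
    .Select(n => (Number: n, Capital: capitals[n % capitals.Length]))
    .ToList();

foreach (var (number, capital) in addresses)
{
    Console.WriteLine($"{number}: {capital.City}, {capital.State}");
}
```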

To convert the longitude and latitude to a geography column type, I can use the STGeomFromText function. If you needed locations with more variance, you could add a small random value to the longitude and latitude of each row as it’s inserted into the address table. The script took about 15 seconds to populate the table, but most of the time was in calculating the geography value, so using the table of numbers to generate data is very fast.
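STGeomFromText takes a well-known text (WKT) string, and for a point that string lists longitude first, then latitude. The C# sketch below builds such a string and adds the small random offset mentioned above. `ToPointWkt` and `Jitter` are hypothetical helper names, and the coordinates are approximate values chosen only for illustration.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: builds the WKT for a point (longitude first, then latitude).
// InvariantCulture keeps the decimal separator a period on every machine.
string ToPointWkt(double lon, double lat) =>
    string.Format(CultureInfo.InvariantCulture, "POINT({0} {1})", lon, lat);

// Hypothetical helper: shifts a coordinate by at most maxOffset degrees in either direction.
double Jitter(double coordinate, double maxOffset, Random rng) =>
    coordinate + (rng.NextDouble() * 2 - 1) * maxOffset;

var random = new Random(42);

// Approximate coordinates for Springfield, IL (illustrative values only).
var longitude = Jitter(-89.65, 0.05, random);
var latitude = Jitter(39.80, 0.05, random);

Console.WriteLine(ToPointWkt(longitude, latitude));
```

The resulting string is what would be passed to STGeomFromText, together with a spatial reference ID such as 4326.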

Accelerate By Sorting

A fundamental axiom of computer science is that you cannot make a computer go faster, you can only reduce the work it has to do. One of the most common ways to do less work is to sort the things your code is processing. While experimenting with different ways to populate a set of aggregate roots, I found that the simple act of sorting the results from the database could make a significant difference in performance.

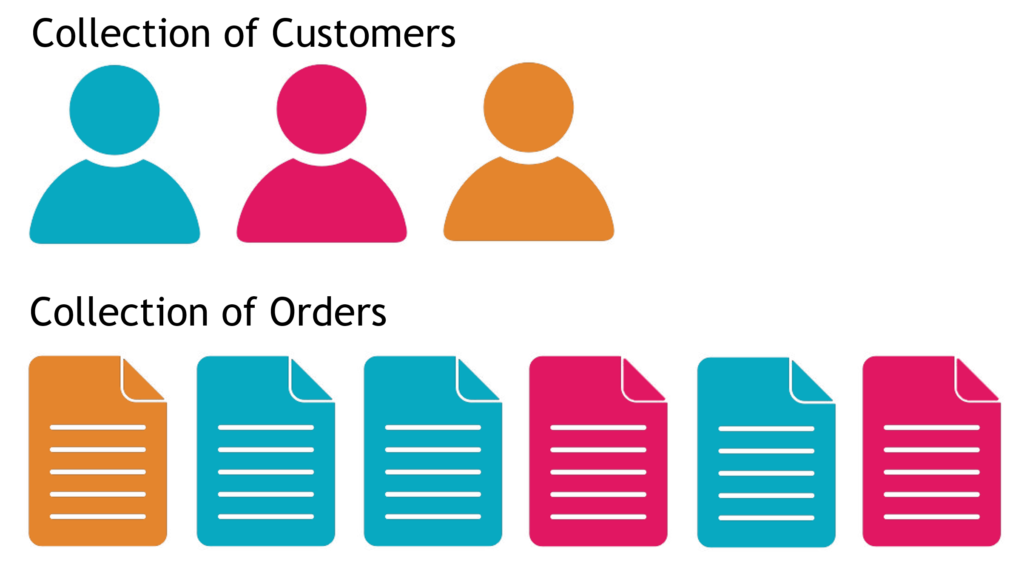

Normally when I have a set of parent objects, each having a collection of child objects, I’ll use a foreach loop to iterate over the parent collection. A LINQ Where predicate provides an easy way to pick out the children that belong to each parent. The problem is that the Where predicate creates more allocations than necessary, and it loops over all the child objects once for each parent object.

    // For each customer, scan the full orders collection for matching children.
    foreach (var customer in customers)
    {
        _setOrders.SetValue(customer, orders.Where(o => o.CustomerId == customer.CustomerId.Value).ToList());
    }

If my child collection was sorted by the parent identifier, I would only have to loop over the child collection once. Let’s look at what that looks like.

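    // Both arrays must already be sorted by CustomerId.
    var position = 0;
    for (var i = 0; i < customers.Length; i++)
    {
        // Consume the run of orders that belongs to the current customer.
        while (position < orders.Length && orders[position].CustomerId == customers[i].CustomerId)
        {
            customers[i].Orders.Add(orders[position]);
            position++;
        }
    }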

The counter is defined outside the for loop, so it is not reset to the beginning of the child collection on each iteration of the parent collection. This eliminates iterating over the part of the child collection that has already been covered. Once the current child element no longer matches the current parent element, we can advance to the next parent element.

Using the nested while statement with a counter may reduce the readability of the code, but it’s not overly complex, and the performance benefit speaks for itself.
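For anyone who wants to try the technique in isolation, here is a self-contained sketch that includes the sorting step. The sample data is made up, tuples stand in for the Customer and Order entities, and the extra loop that skips orders without a matching customer is an addition for safety, not part of the snippet above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sample data, sorted by CustomerId before the single pass.
var customers = new[] { (CustomerId: 2, Name: "Bea"), (CustomerId: 1, Name: "Al"), (CustomerId: 3, Name: "Cy") }
    .OrderBy(c => c.CustomerId)
    .ToArray();
var orders = new[] { (OrderId: 10, CustomerId: 3), (OrderId: 11, CustomerId: 1), (OrderId: 12, CustomerId: 1) }
    .OrderBy(o => o.CustomerId)
    .ToArray();

var ordersByCustomer = new Dictionary<int, List<int>>();
var position = 0;   // cursor into orders; never moves backwards

foreach (var customer in customers)
{
    // Added for safety: skip orders whose customer is not in the parent collection.
    while (position < orders.Length && orders[position].CustomerId < customer.CustomerId)
    {
        position++;
    }

    // Consume the run of orders that belongs to the current customer.
    var matched = new List<int>();
    while (position < orders.Length && orders[position].CustomerId == customer.CustomerId)
    {
        matched.Add(orders[position].OrderId);
        position++;
    }
    ordersByCustomer[customer.CustomerId] = matched;
}

foreach (var pair in ordersByCustomer)
{
    Console.WriteLine($"Customer {pair.Key}: {pair.Value.Count} order(s)");
}
```

In a real repository the sorting would more likely come from an ORDER BY clause in each query, so the collections arrive already sorted.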

So how much of a performance boost do you get from using this technique? Obviously it depends on how many objects are in the collections. If there is only one parent and one child, it will be slower, but not by much. It doesn’t take long to sort a collection of one.

With a collection of 100 parents, 1,000 children, and 10,000 grandchildren, there was a 50X improvement. Not having to iterate over 10,000 grandchildren 1,000 times makes a big difference.

The next question that should spring to mind is how relevant the time savings are when making out-of-process calls to a database. Again it varies, but with sample data, I’m seeing a 3X improvement.

My suggestion: if you identify a situation where you need a performance improvement that involves iterating over a collection to find a set of items, sort it. The impact may surprise you.

Supercharged Dapper Repositories

Part #1: Separate Filters From Queries

Dapper has been a really popular data access library for over a decade, but when it comes to using it with domain entities, there are a few things that can create friction. In this series, I want to show you how to supercharge your Dapper repositories to be faster and more maintainable.

Sample Code: https://github.com/JZuerlein/DapperPopulateAggregate

PowerPoint Makes YouTube Videos?

I want to spend time creating useful content, not editing video. PowerPoint has a great feature to record and include video in a presentation. It’s super simple, and lets you promote yourself and your ideas!

Paging Data With SQL Server and C#

Learn about several strategies to improve the performance of paging data with .NET and SQL Server, as well as different ways to measure the impact of your software.

NPI data is available at CMS.gov https://download.cms.gov/nppes/NPI_Files.html

SqlConnection Statistics For SQL Server https://learn.microsoft.com/en-us/dotnet/framework/data/adonet/sql/provider-statistics-for-sql-server

Jeff Zuerlein https://www.linkedin.com/in/jeff-zuerlein-2aa67b7/

Chicago C# Web Developers Group

Thank you to Paul Ebreo for organizing last night’s Meetup!

If you would like to take a look at the sample code or the slide deck from last night’s presentation, here it is!